From Linear Models to Neural Networks

From Linear Models to Neural Networks#

At their core, neural networks are function approximators composed of layers of interconnected nodes that transform input data through weighted connections and nonlinear activations. Neural networks date back to the perceptron, proposed in the 1950s. They showed early promise but soon hit limitations: a single-layer perceptron can only learn linear decision boundaries and hence fails on even simple nonlinear tasks such as XOR.

The resurgence of interest began in the 1980s with the development of backpropagation, enabling multi-layer networks to be efficiently optimized. However, they were soon overshadowed by models like the kernel SVM, which were mathematically elegant, easier to train, and delivered strong performance on small to medium datasets.

Kernel methods offer strong theoretical guarantees and closed-form training objectives, but they scale poorly to large datasets and require careful kernel selection. Neural networks can be seen as models that learn the feature transformation themselves, whereas kernel methods obtain it implicitly from a fixed kernel. Adapting the transformation and the decision boundary simultaneously makes neural networks exceptionally powerful in high-dimensional, unstructured domains like images, audio, and text. The breakthrough came in the 2010s, fueled by larger datasets, faster GPUs, and architectural innovations (e.g., convolutional layers, residual connections), which enabled deep neural networks (DNNs) to surpass traditional models on a wide range of tasks.

We start by introducing another linear classifier, which is then extended to a deep neural network classifier by a preceding feature transformation.

Logistic and Softmax Regression#

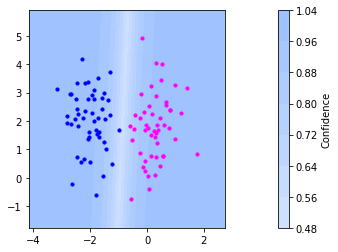

Logistic regression is a binary classification model that finds a hyperplane separating the classes (like the SVM). In contrast to the SVM, logistic regression uses the distance of a point to the separating hyperplane as a confidence measure: the further a point is away from the separating hyperplane, the more confidently it is assigned to the corresponding class. The plot below indicates a separating hyperplane and the confidence assigned by logistic regression. We see that the confidence is low at the decision boundary and increases quickly when moving away from it.

The confidence in logistic regression is modeled by a probability measuring how likely a sample belongs to the positive class.

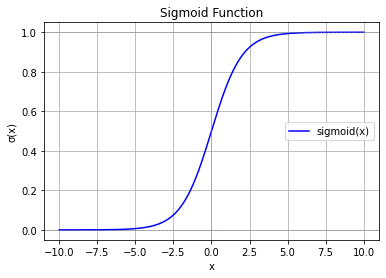

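This probability is computed via the sigmoid function \(\sigma(z)=\frac{1}{1+\exp(-z)}\), applied to the value \(z=\vvec{w}^\top\vvec{x}+b\) of the affine function that defines the hyperplane.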

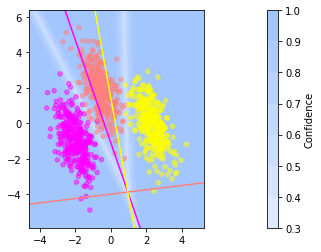
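The mapping from the position relative to the hyperplane to a confidence can be sketched in a few lines of NumPy. The parameters `w` and `b` and the three points are made-up values for illustration, not taken from the plots above.

```python
import numpy as np

def sigmoid(z):
    """Logistic sigmoid 1 / (1 + exp(-z)), applied elementwise."""
    return 1.0 / (1.0 + np.exp(-z))

# Hypothetical hyperplane parameters (assumption, for illustration only).
w = np.array([2.0, -1.0])
b = 0.5

# Three points: one on the decision boundary, one on each side of it.
X = np.array([[0.0, 0.5], [2.0, 0.0], [-2.0, 0.0]])

scores = X @ w + b        # proportional to the signed distance to the hyperplane
probs = sigmoid(scores)   # confidence for the positive class
print(probs)
```

The point on the boundary has score zero and hence confidence 0.5, while the other two points receive confidences close to one and close to zero, respectively.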

For multiclass classification, softmax regression generalizes logistic regression to \(c\) classes by learning one hyperplane per class. The further a point lies on the positive side of a hyperplane, the more confidently it is assigned to that class. The confidence is again interpreted as the probability that a sample belongs to a class, which is now computed via the softmax function.

Definition 32 (Softmax Regression)

The softmax regression (a.k.a. multinomial logistic regression) classifier computes the probability that point \(\vvec{x}\) belongs to class \(l\) by means of \(c\) hyperplanes, defined by parameters \(\vvec{w}_l\) and \(b_l\). We gather the parameters of the \(c\) hyperplanes in a matrix \(W\), such that \(W_{l\cdot} = \vvec{w}_l^\top\), and a vector \(\vvec{b}\). The softmax regression classifier then models the probability that point \(\vvec{x}\) belongs to class \(l\) over the parameters \(W\) and \(\vvec{b}\) as

\[p(y=l\mid\vvec{x};W,\vvec{b}) = \mathrm{softmax}(W\vvec{x}+\vvec{b})_l = \frac{\exp(\vvec{w}_l^\top\vvec{x}+b_l)}{\sum_{j=1}^c\exp(\vvec{w}_j^\top\vvec{x}+b_j)}.\]

The softmax function \(\mathrm{softmax}:\mathbb{R}^c\rightarrow [0,1]^c\), \(\mathrm{softmax}(\vvec{x})_y=\frac{\exp(x_y)}{\sum_{j=1}^c\exp(x_j)}\) returns a vector reflecting the confidences for each class. The confidences sum up to one, since the denominator is the sum of the numerators over all classes. The plot below shows the confidences and the hyperplanes learned for a 3-class classification problem.

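    import matplotlib.pyplot as plt
    import numpy as np
    from sklearn.datasets import make_blobs
    from sklearn.linear_model import LogisticRegression

    # make 3-class dataset for classification
    centers = [[-5, 0], [0, 1.5], [5, -1]]
    X, y = make_blobs(n_samples=1000, centers=centers, random_state=40)
    transformation = [[0.4, 0.2], [-0.4, 1.2]]
    X = np.dot(X, transformation)

    # fit a softmax (multinomial logistic) regression classifier
    # note: newer scikit-learn releases deprecate the multi_class argument
    multi_class = "multinomial"
    clf = LogisticRegression(
        solver="sag", max_iter=100, random_state=42, multi_class=multi_class
    ).fit(X, y)
    coef = clf.coef_
    intercept = clf.intercept_

    # plot_conf is a plotting helper that is not defined in this snippet
    # (presumably provided elsewhere in the book); it draws the class confidences
    plot_conf(clf.predict_proba, show_class_assignment=False,
              x_max=max(X[:, 0]) + 1, y_max=max(X[:, 1]) + 1,
              x_min=min(X[:, 0]) - 1, y_min=min(X[:, 1]) - 1)
    sc = plt.scatter(X[:, 0], X[:, 1], c=y, s=20, alpha=0.5, cmap="spring")
    xmin, xmax = plt.xlim()
    ymin, ymax = plt.ylim()


    def plot_hyperplane(c, color):
        # draw the line w_c^T x + b_c = 0 that belongs to class c
        def line(x0):
            return (-(x0 * coef[c, 0]) - intercept[c]) / coef[c, 1]

        plt.plot([xmin, xmax], [line(xmin), line(xmax)], color=color)


    # one hyperplane per class, colored like the points of that class
    for k in clf.classes_:
        plot_hyperplane(k, sc.to_rgba(k))
    plt.show()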
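The softmax function defined above can also be written out directly. The following is a minimal sketch with made-up parameters `W`, `b` and a made-up point `x`. Subtracting the maximum score before exponentiating is a common numerical safeguard against overflow and does not change the result, because the shift cancels in the fraction.

```python
import numpy as np

def softmax(z):
    """Softmax of a 1-D score vector; shifting by max(z) avoids overflow."""
    shifted = z - np.max(z)
    e = np.exp(shifted)
    return e / e.sum()

# Hypothetical parameters for c = 3 classes and d = 2 features (assumption).
W = np.array([[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]])
b = np.array([0.0, 0.5, -0.5])
x = np.array([2.0, 1.0])

p = softmax(W @ x + b)   # class confidences for the point x
print(p, p.sum())
```

The entries of `p` are positive and sum to one, and the largest confidence goes to the class whose hyperplane score \(\vvec{w}_l^\top\vvec{x}+b_l\) is largest.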

Training#

Task (softmax regression)

Given a classification training data set that is sampled i.i.d. \(\mathcal{D}=\{(\vvec{x}_i,y_i)\mid 1\leq i\leq n, y_i\in\{1,\ldots,c\}\}\).

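Find the parameters \(\theta=(W,\vvec{b})\) such that the posterior probabilities, which are modeled as

\[p(y\mid\vvec{x};\theta)=\mathrm{softmax}(W\vvec{x}+\vvec{b})_y,\]

are maximized on the training data:

\[\max_{\theta}\ \prod_{i=1}^n p(y_i\mid\vvec{x}_i;\theta). \tag{52}\]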

Return the classifier defining parameters \(\theta\).

As discussed in the scope of naive Bayes, computing the product of probabilities is generally not a good idea, because we might run into underflow. Hence, we again apply the log-probabilities trick and maximize the log-probabilities instead of the probabilities directly. We apply the logarithm to Eq. (52), divide by the number of samples in the dataset, and obtain the equivalent objective

\[\max_{\theta}\ \frac{1}{n}\sum_{i=1}^n \log p(y_i\mid\vvec{x}_i;\theta).\]
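The underflow problem is easy to reproduce. The sketch below uses randomly drawn values as stand-ins for the per-sample probabilities \(p(y_i\mid\vvec{x}_i;\theta)\); they are an assumption for illustration and do not come from a fitted model.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical per-sample probabilities of the correct class (assumption).
probs = rng.uniform(0.01, 0.2, size=1000)

product = np.prod(probs)            # far below the smallest float64, underflows to 0.0
mean_log = np.mean(np.log(probs))   # average log-probability stays finite
print(product, mean_log)
```

The product carries no information anymore once it has underflowed, whereas the mean log-probability can still be compared across parameter settings.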

Instead of maximizing the logarithmic values, which are negative, we multiply by minus one and obtain the following equivalent minimization objective, whose summands are given by the cross entropy:

\[\min_{\theta}\ \frac{1}{n}\sum_{i=1}^n -\log p(y_i\mid\vvec{x}_i;\theta).\]

Definition 33 (Cross-entropy)

The cross entropy is a function \(CE:\{1,\ldots,c\}\times [0,1]^c\rightarrow \mathbb{R}_+\), mapping a label \(y\in\{1,\ldots,c\}\) and a probability vector \(\vvec{z}\in[0,1]^c\) to the negative logarithm of the probability vector at position \(y\):

\[CE(y,\vvec{z}) = -\log(z_y).\]
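A small numerical sketch shows how the cross entropy behaves for different predictions. The probability vectors are made up for illustration, and the labels are 0-based here (Python indexing), whereas the definition above counts classes from 1.

```python
import numpy as np

def cross_entropy(y, z):
    """Negative log of the probability that z assigns to the label y (0-based)."""
    return -np.log(z[y])

y = 0  # the true class (0-based index, an assumption of this sketch)

confident_correct = np.array([0.90, 0.05, 0.05])
undecided = np.array([1 / 3, 1 / 3, 1 / 3])
confident_wrong = np.array([0.01, 0.98, 0.01])

losses = [cross_entropy(y, z) for z in (confident_correct, undecided, confident_wrong)]
print(losses)
```

The loss is close to zero for the confident correct prediction, equals \(\log 3\) for the undecided one, and grows quickly as the probability of the correct class approaches zero.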

Cross entropy is a popular loss for classification tasks, since it heavily penalizes low-probability predictions for the correct class. In addition, applying the logarithm to the softmax output dampens the vanishing gradient effect that the sigmoid and softmax functions suffer from. Hence, using cross entropy as a loss helps to numerically optimize the softmax output of the softmax regression classifier.
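To make the training task concrete, here is a minimal sketch of plain gradient descent on the mean cross entropy. It relies on the standard fact that the gradient of the cross entropy of a softmax output with respect to the logits is the predicted probability vector minus the one-hot label. The toy data, the step size, and the number of iterations are assumptions chosen for illustration, and labels are 0-based.

```python
import numpy as np

def softmax_rows(Z):
    """Row-wise softmax with the usual max-shift for numerical stability."""
    E = np.exp(Z - Z.max(axis=1, keepdims=True))
    return E / E.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)

# Hypothetical toy data: three Gaussian blobs in 2-D with labels 0, 1, 2.
centers = np.array([[-3.0, 0.0], [0.0, 3.0], [3.0, 0.0]])
y = rng.integers(0, 3, size=300)
X = centers[y] + rng.normal(scale=0.7, size=(300, 2))

n, d = X.shape
c = 3
W, b = np.zeros((c, d)), np.zeros(c)   # row l of W holds w_l
Y = np.eye(c)[y]                       # one-hot labels, shape (n, c)

def loss(W, b):
    """Mean cross entropy of the softmax regression model on (X, y)."""
    P = softmax_rows(X @ W.T + b)
    return -np.log(P[np.arange(n), y]).mean()

initial_loss = loss(W, b)              # log(3) for all-zero parameters
step = 0.1                             # hand-picked step size (assumption)
for _ in range(500):
    P = softmax_rows(X @ W.T + b)
    G = (P - Y) / n                    # gradient of the mean loss w.r.t. the logits
    W -= step * (G.T @ X)              # chain rule through the affine function
    b -= step * G.sum(axis=0)

final_loss = loss(W, b)
accuracy = (softmax_rows(X @ W.T + b).argmax(axis=1) == y).mean()
print(initial_loss, final_loss, accuracy)
```

The gradient `P - Y` does not contain a factor that shrinks when the softmax saturates on a wrong class, which is one way to see why the logarithm in the cross entropy eases optimization.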

Softmax Regression as a Computational Graph#

The plot below visualizes the softmax regression model in the way that is common for neural networks. The affine function is visualized by the edges connecting the input layer on the left with the output layer. The output layer has \(c\) nodes, one for each class. Every edge has a weight that is given by the matrix \(W\). For example, the weight of the edge from input node \(x_i\) to the output node of class \(l\) is \(W_{li}\), in line with \(W_{l\cdot}=\vvec{w}_l^\top\). At each output node, the inputs are multiplied with the weights of the incoming edges and summed up, the bias term is added, and the softmax function is applied.

The outputs of the final layer before the application of the softmax function are also called the logits. For the simple softmax regression problem, the logits are hence given by the vector \(\vvec{z} = W\vvec{x}+\vvec{b}\).
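The correspondence between the graph picture and the matrix form of the logits can be checked numerically. In this sketch the sizes `d` and `c` and all parameter values are arbitrary assumptions. Row `l` of `W` holds \(\vvec{w}_l\), so `W[l, i]` is the weight on the edge from input `i` to output node `l`.

```python
import numpy as np

d, c = 4, 3                      # hypothetical input dimension and number of classes
rng = np.random.default_rng(1)
W = rng.normal(size=(c, d))      # W[l, i] weights the edge from x_i to output node l
b = rng.normal(size=c)
x = rng.normal(size=d)

# Logits in matrix form, one entry per output node.
z = W @ x + b

# The same logits computed edge by edge, as the graph suggests.
z_edges = np.array(
    [sum(W[l, i] * x[i] for i in range(d)) + b[l] for l in range(c)]
)
print(np.allclose(z, z_edges))
```

Both computations agree, so the graph is just a drawing of the affine function \(W\vvec{x}+\vvec{b}\).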

Representation Learning#

Logistic and softmax regression rely on linear decision boundaries. In practice, data often lies on complex, nonlinear manifolds. Hence, we apply a trick similar to the one we have seen in linear regression and the SVM: we use a linear model for nonlinear problems by applying a feature transformation first. In regression, the feature transformation is given by the basis functions, and in the SVM it is implicitly defined by the kernel. Neural networks learn the feature transformation by stacking various simple functions after one another, whose parameters are optimized jointly with the classifier's parameters. The resulting model looks as follows:

On the left, the vector \(\vvec{x}\) is fed as input into the graph. It produces an intermediate output \(\phi(\vvec{x})\), the feature transformation, which is then classified by a softmax regression that creates the output of the graph. In the following, we discuss how to interpret this graph and how the feature transformation \(\phi\) is computed.
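As a rough preview, the composition of a feature transformation with a softmax regression can be sketched as follows. The concrete form of `phi` used here, a single affine map followed by a ReLU, is only an assumed stand-in, and all sizes and random parameters are hypothetical. How \(\phi\) is actually built is the topic of the following discussion.

```python
import numpy as np

def relu(a):
    """Elementwise max(a, 0), a simple nonlinearity."""
    return np.maximum(a, 0.0)

def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()

rng = np.random.default_rng(2)
d, h, c = 2, 5, 3   # hypothetical sizes: input, transformed features, classes

# Parameters of the assumed feature transformation phi.
W1, b1 = rng.normal(size=(h, d)), np.zeros(h)
# Parameters of the softmax regression classifier acting on phi(x).
W, b = rng.normal(size=(c, h)), np.zeros(c)

def phi(x):
    """Stand-in feature transformation: affine map followed by a ReLU."""
    return relu(W1 @ x + b1)

def predict_proba(x):
    """Softmax regression applied to the transformed features."""
    return softmax(W @ phi(x) + b)

x = np.array([0.5, -1.0])
p = predict_proba(x)
print(phi(x).shape, p, p.sum())
```

In training, the parameters of `phi` and of the classifier would be optimized jointly, which is what distinguishes this setup from fixed basis functions or a fixed kernel.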