Random Forests

Contents

Random Forests#

The performance of a decision tree depends on its structure, that is determined by a greedy method, for example with the CART algorithm. The greedy selection of the best split tends to overfit on the training data, as greedy methods quickly get lost in details since they operate only on local views of the data. We can overcome the tendency to overfit by choosing an early stopping criterion, but we can do even better by combining various overfitting trees into an ensemble – called a random forest. The disadvantage is that we lose the interpretability of a single tree, but on the other hand, we obtain one of the strongest classifiers to this date.

The theoretical motivation for the random forest is the bias-variance tradeoff for classification (to be discussed in detail in Bias Variance Tradeoff). Similar to the bias-variance tradeoff in regression, a complex model tends to overfit and consequently has a high variance and low bias. In turn, a simple model has a high bias and low variance. If we now train a couple of overfitting decision trees, for example by setting the stopping criteria pretty lax, such that we get big trees, and if we aggregate the results of those trees, then we get an low bias, low variance classifier.

Inference#

Definition 25 (Random Forest)

A Random Forest classifier is an ensemble of \(m\) decision trees \(f_{dt1},\ldots,f_{dtm}\) that aggregates predictions of single trees via a majority vote. Let \(\mathbb{1}(\vvec{p})\in\{0,1\}^c\) denote the one-hot encoded argmax function (also called hardmax), such that \(\mathbb{1}(\vvec{p})_y=1\) if and only if \(y = \argmax_{1\leq l\leq c} p_l\). We define then the random forest classifier as

The inference of a radom forest is a simple majority vote of the decision trees. For any ensemble of classifiers, predicting the class over a majority vote, we can derive an error bound that details which characteristics of the base classifiers make a strong ensemble. The error bound considers each tree the outcome of a random variable \(\omega\), that describes the training set of the tree and other random elements that made the tree how it is.

Theorem 19 (expected prediction error bound)

Let

be the most frequently predicted label that is not the actual label of the random forest. We define the margin function of a random forest as

Proof. If the margin is negative, then the prediction of the random forest is not correct. Hence, we can write that the expected prediction error is the probability that the margin is negative

We can apply Chebychev’s inequality to the probability above. Chebychev’s inequality states that

Hence, the EPE is bounded above by the variance of the margin divided my the strength of the classifier. Since the variance of the margin does not have a good interpretation with regard to a random forest, we derive now a bound for the variance.

We rewrite the margin of the random forest in dependence of the margin of the trees as

where the last equation derives from the linearity of the expected value and the fact that the expected value of binary random variable is equal to the probability that the binary random variable is equal to one. From Eq. (39) follows that the expected value of the tree margin function over \(\omega\) is the margin function \(\mathbb{E}_\omega[m_\omega(\vvec{x},y)]=m(\vvec{x},y)\). We can now write the variance of the margin with respect to single trees as

Since we have for any variable of function \(h_\omega\), and i.i.d. random variables \(\omega\) and \(\hat{\omega}\)

The last equation derives from the fact that the inner expected value subject to \(\omega,\hat{\omega}\) is the covariance of \(m_\omega\) and \(m_{\hat{\omega}}\). The covariance can be expressed as the Pearson correlation coefficient multiplied with the corresponding standard deviations. Dividing and multiplying Eq. (41) with \(\mathbb{E}_\omega[\sigma_\omega]^2 = \mathbb{E}_{\omega,\hat{\omega}}[\sigma(m_\omega) \sigma(m_\hat{\omega})]\) yields

where the last inequality stems from Jenssens inequality applied to the convex function \(x^2\).

We apply again Jenssens inequality and get

Further, we have \(\mathbb{E}_\omega\left[\mathbb{E}_{\vvec{x},y}[m_\omega(\vvec{x},y)^2]\right]\leq 1\) because \(m_\omega(\vvec{x},y)\in\{-1,0,1\}\). As a result, we can bound Eq. (42) as

This concludes our result.

The theorem, published in [1], identifies two properties of the decision trees that bound the EPE of a random forest:

The strength of the random forest classifier \(\mu(m)=\mathbb{E}_{\vvec{x},y}\mathbb{E}_\omega[m_\omega(\vvec{x},y)]\): the higher the expected margin of the decision trees, the better. A high margin indicates that the random forest confidently predicts the correct classes.

The correlation between the trees: the lower the correlation is between trees, the better. Note that the correlation is measured in the prediction confidences of the tree for all classes.

The main implication of the EPE bound is that we do not necessarily need an ensemble of highly accurate (high strength) decision trees to make a strong random forest, but that we can also lower the error bound by learning trees that do not correlate much, but make in average still good predictions. This incentivizes strategies to increase the diversity of trees in a random forest.

Training#

The training procedure of the random forest follows the theoretical motivations for strong ensemble learners. The rough procedure is to sample training data from the given dataset, learn trees that overfit on the dataset samples, and to incorporate further measures to decrease the correlation of trees. For example, by considering for each split only on a sampled subset of the features. In detail, the learning algorithm for random forests look as follows.

Algorithm 10 (Random Forest Training)

Input: training data \(\mathcal{D}\), number of features \(\hat{d}<d\)

\(\mathcal{DT}\gets\emptyset\) # Initialize the set of trees

for \(j\in\{1,\ldots, m\}\)

Draw a bootstrap sample \(\mathcal{D}_j\) from \(\mathcal{D}\)

Train the decision tree \(f_{dtj}\) on \(\mathcal{D}_j\) with the following specifications:

Set as stopping criterion that the minimum number of samples per split is one (train untill maximum depth is reached).

Select for each split randomly \(\hat{d}\) features that are considered for finding the best split

\(\mathcal{DT}\gets \mathcal{DT}\cup\{f_{dtj}\}\)

Random forests use a specific sampling procedure to imitate various training datasets from one given training dataset. The employed sampling technique is called bootstrapping.

Bootstrapping#

Bootstrapping is similar to the dataset division known from cross valiadation, only that in bootstrapping the generated datasets overlap, that is, various data points are present in multiple dataset samples.

Definition 26 (Bootstrapping)

Given a dataset \(\mathcal{D}=\{(\vvec{x}_i,y_i)\mid 1\leq i\leq n\}\) containing \(n\) datapoints. A bootstrap sample \(\mathcal{D}_j=\{(\vvec{x}_{i_b},y_{i_b})\mid 1\leq b\leq n\}\subseteq\mathcal{D}\) gathers \(n\) uniformly distributed samples (\(i_b \sim\mathcal{U}\{1, \dots, n\}\)) from \(\mathcal{D}\). Hence, \(\mathcal{D}_j\) may contain various duplicates of data points.

Note

It’s common to define datasets as mathematical sets, as we have done in this book. However, a mathematical set has no duplicates in general. Datasets on the other hand may contain duplicate data points and in bootstrapping samples this is most often the case. Hence, in order to not get confused, you can also imagine a dataset as a set of thrupels \((i,\vvec{x}_i,y_i)\) where \(i\) is an index that is uniquely assigned to each datapoint. We just omit the index from our dataset notation to shorten it.

Let’s write a simple example demonstrating what bootstrap samples look like:

import numpy as np

# Original dataset

data = np.array([1, 2, 3, 4, 5])

n = len(data)

# Number of bootstrap samples

m = 5 # feel free to increase this

print("Original data D", data)

print("\nBootstrap samples:")

for j in range(m):

# Sample with replacement

bootstrap_sample = np.random.choice(data, size=n, replace=True)

print(f"D{j+1}: {bootstrap_sample}")

Original data D [1 2 3 4 5]

Bootstrap samples:

D1: [5 1 1 2 2]

D2: [2 2 3 3 1]

D3: [3 1 5 4 3]

D4: [1 2 5 2 1]

D5: [1 2 3 2 3]

We see how some data points are duplicates in the bootstrap samples and other are not occurring at all. A data point is not chosen for a bootstrap sample with probability \((1-\frac1n)^n\) (\(n\) times, the data point hasn’t been selected, which has a probability of \(1-\frac1n\)). If \(n\rightarrow \infty\) we have \((1-\frac1n)^n\rightarrow \exp(-1)\approx 1/3\). Hence, very roughly one third of all datapoints is missing from one bootstrap sample (in a big dataset).

Out Of Bag Error Estimate#

Although random forests are generally very robust, they can be somewhat sensitive to setting the parameter \(\hat{d}\). Considering only a small subset of variables for each split reduces correlation (good) but can also reduce the strength of the trees (bad). In contrast, if you choose a high number of variables considered in each split, then the correlation is probably high (bad), but the strength might be increased (good). The number of features can be determined in random forests by means of the Out Of Bag (OOB) error estimate, which works similar to cross validation.

Each bootstrap dataset \(\mathcal{D}_j\) generates a test set \(\mathcal{T}_j=\mathcal{D}\setminus \mathcal{D}_j\) containing those datapoints the tree hasn’t been trained on. Similarly to cross-validation, we can use the repeated splits into train and testset to obtain a fairly good estimate of the EPE. The Out Of Bag (OOB) error rate averages the random forest predictions, using for each prediction only those trees that haven’t seen the data point in training:

Using the OOB, instead of cross-validation, is favorable for decision trees, since it makes efficient use of the created dataset splits during random forest training.

Decision boundary#

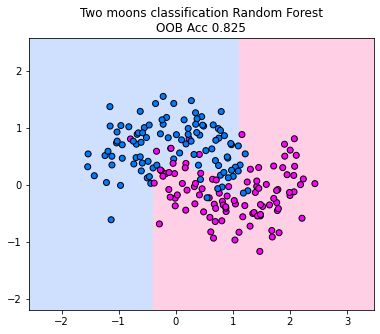

We plot the decision boundary of a random forest for the two moons classification dataset. Below, we train 10 decision trees, considering only one randomly sampled feature for each split (since the dimensionality of the data is two, we can’t go higher if we want to restrict the number of features considered for each split). We observe how the majority vote between the trees fracture the decision boundary in the area between the moons. The decision boundary indicates to us that the random forest is able to capture much more complex decision boundaries than a single tree. However, in this case, we do observe some overfitting areas where the decision boundary is adapting a lot to the points that reach into the other class. However, if you increase the number of trees in the forest, this behavior diminishes and the decision boundary becomes more smooth (in tendency).

from sklearn.model_selection import train_test_split

from sklearn.ensemble import RandomForestClassifier

import matplotlib.pyplot as plt

from sklearn.inspection import DecisionBoundaryDisplay

from matplotlib.colors import ListedColormap, LinearSegmentedColormap

from sklearn.datasets import make_moons

X,y = make_moons(noise=0.3, random_state=0, n_samples=200)

_, ax = plt.subplots(ncols=1, figsize=(12, 5))

cm = ListedColormap(["#a0c3ff", "#ffa1cf"])

cm_points = ListedColormap(["#007bff", "magenta"])

rf = RandomForestClassifier(max_depth=None, n_estimators=10, max_features=1,oob_score=True, random_state=42)

y_pred = rf.fit(X, y)

disp = DecisionBoundaryDisplay.from_estimator(

rf,

X,

response_method="predict",

plot_method="pcolormesh",

shading="auto",

alpha=0.5,

ax=ax,

cmap=cm,

)

scatter = disp.ax_.scatter(X[:, 0], X[:, 1], c=y, edgecolors="k",cmap = cm_points)

_ = disp.ax_.set_title(

f"Two moons classification Random Forest\nOOB Acc {rf.oob_score_}"

)

ax.axis('scaled')

plt.show()