k-Means is MF

Contents

k-Means is MF#

In the previous section, we introduced the k-means algorithm and saw how it minimizes an objective that captures the within-cluster scatter. But this raises several natural questions:

How good is this algorithm?

Does it always converge?

Is it just a heuristic, or can we derive more general guarantees?

To answer these, we now take a step back and view k-means through the lens of the more general and powerful concept of matrix factorization. This perspective not only deepens our understanding of how k-means works, but also connects it to a broader class of unsupervised learning methods, such as truncated SVD and PCA.

Reformulating k-means as MF#

Representing Cluster Assignments as a Binary Matrix#

We begin by formalizing the cluster assignments using a binary indicator matrix.

Let \(Y \in \{0,1\}^{n \times r}\) be a matrix such that:

This matrix has exactly one non-zero entry per row, corresponding to each point being assigned to exactly one cluster. That is:

We denote by \( \mathbb{1}^{n \times r} \) the set of all such valid cluster indicator matrices:

The Centroid Matrix#

Next, we derive the matrix of cluster centroids in terms of \(Y \). Suppose we are given a data matrix \(D \in \mathbb{R}^{n \times d}\), where each row \(D_{i\cdot}\) represents a data point. The centroid of cluster \(\mathcal{C}_s\) is the mean of all points assigned to it:

We can collect all \(r\) centroids into a single matrix \(X \in \mathbb{R}^{d \times r}\), where each column \( X_{\cdot s}\) is the centroid of cluster \(s\). In matrix form, this becomes:

This formula expresses the centroids as a matrix product of the data and the cluster assignments — revealing a key insight: k-means clustering can be seen as a matrix factorization problem.

The k-means objective as MF#

Theorem 34 (\(k\)-means MF objective)

The \(k\)- means objective in Eq. (68) is equivalent to

Proof. The matrix \(Y\) is a cluster-indicator matrix, indicating a partition of the \(n\) data points into \(r\) sets. For every data point with index \(i\in\{1,\ldots,n\}\), there exists one cluster index \(s_i\), such that \(Y_{i s_i}=1\) and \(Y_{i s}=0\) for \(s\neq s_i\) (point \(i\) is assigned to cluster \(s_i\)). Using this notation, the objective function in Eq. (69), returning the distance of every point to its cluster centroid, is equal to

The third equation uses the composition of the squared Frobenius norm as a sum of the squared Euclidean vector norm over all rows.

The matrix factorization of k-means has a very specific structure. The left matrix \(Y\) is binary and every row contains exactly one entry being equal to one, and all the others are zero. That means, we can sort the rows of a data matrix, such that all points in one cluster have consecutive indices. The resulting matrix \(Y\) looks as the left matrix below, there is one block of ones (black) in the first column, representing the first cluster, and then follows a block of ones in the second row, representing the second cluster and so on. The right matrix \(X^\top\) indicates the centroids as the rows, each centroid has here its own color. The data matrix is then approximated over these centroids, as displayed on the right.

\(=\)

Theorem 35

The k-means objective is nonconvex.

Proof. If we consider the penalized version of the k-means objective (putting the constraints in the main objective), then we get the following objective:

This objective has a local minimum for every feasible \(Y\), and inbetween the feasible points the objective returns infinity.

Example 22 (k-means matrix factorization for recommender systems)

Since k-means is an instance of the low-rank matrix factorization universe, we can compare the factorization of k-means in the scope of the recommender example, that we used to motivate low-rank matrix factorizations. We compute a clustering of the neutral-rating imputed running example of the recommender systems section and obtain the following:

The movie patterns are now given by the centroids, and every user belongs to exactly one movie pattern, indicates by the user pattern matrix \(Y\). For example, the first user is assigned to movie pattern 1:

The k-means assignment scheme allows for a clear interpretation of the movie patterns. Each pattern indicates one user preference scheme and can hence be analyzed by itself, and not in dependence to the coefficients in \(Y\) and the other movie patterns. The example above indicates two behaviours: users that like movie one and two but not so much the other movies, and users that like movie 3.

The clustering above is obtained via sklearn:

M = [[5,3,1,1],[2,1,5,3],[2,1,5,3],[4,3,4,2],[5,5,3,1],[3,1,5,3]]

from sklearn.cluster import KMeans

kmeans = KMeans(n_clusters=2)

kmeans.fit(M)

The clustering can be obtained by kmeans.labels_ and kmeans.cluster_centers_. Sklearn labels are nominally encoded, that is, the label indicates the assigned cluster by a number. Since we have a nonconvex problem, it’s not guaranteed that you get the exact same clustering solution as above. As an exercise, you can try the code above and get the matrix factorization that is indicated by the clustering.

Convergence Analysis#

Ok, so \(k\)-means is an instance of the rank-\(r\) matrix factorization problem. Can we also characterize the global minimizers of \(k\)-means like we did it for the rank-r matrix factorization problem with truncated SVD? Unfortunately not. However, we can characterize the global minimizers of the objective when we fix one of the factor matrices.

Theorem 36 (Centroids as minimizers)

Given \(D\in\mathbb{R}^{n\times d}\) and \(Y\in\mathbb{1}^{n\times r}\), the minimizer of the optimization problem

is given by the centroid matrix \(X=D^\top Y(Y^\top Y)^{-1}\).

Proof. Show that the objective in Eq.~\eqref{eq:minX} is convex. The minimizer is then given by the stationary point:

Theorem 37 (Nearest centroid assignments as minimizers)

Given \(D\in\mathbb{R}^{n\times d}\) and \(X\in\mathbb{R}^{d\times r}\), the minimizer of the optimization problem

is the matrix, assigning every point to the nearest centroid:

Proof. Follows from the \(k\)-means centroid objective:

Corollary 6

Lloyds’ algorithm performs an alternating minimization (also called block coordinate descent):

The sequence \(\{(X_k,Y_k)\}\) converges, since we decrease the objective function value in every step:

Theorem 38 (Equivalent \(k\)-means objectives)

The following objectives are equivalent

Implementation#

import numpy as np

def RSS(D,X,Y):

return np.sum((D- Y@X.T)**2)

def getY(labels):

Y = np.zeros((len(labels), max(labels)+1))

for i in range(0, len(labels)):

Y[i, labels[i]] = 1

return Y

def update_centroid(D,Y):

cluster_sizes = np.diag(Y.T@Y).copy()

cluster_sizes[cluster_sizes==0]=1

return D.T@Y/cluster_sizes

def update_assignment(D,X):

dist = np.sum(D**2,1).reshape(-1,1) - 2* D@X + np.sum(X**2,0)

labels = np.argmin(dist,1)

return getY(labels)

def kmeans(D,r, X_init, t_max=10000):

rss_old,t = 0,0

X = X_init

Y = update_assignment(D,X)

#Looping as long as the clustering still changes

while rss_old != RSS(D,X,Y) and t < t_max-1:

rss_old = RSS(D,X,Y)

X = update_centroid(D,Y)

Y = update_assignment(D,X)

t+=1

return X,Y

from sklearn import datasets

n=500

r =3

epsilon = 0.1

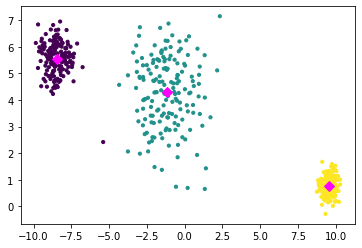

D, labels = datasets.make_blobs(n_samples=n,centers=3, cluster_std=[epsilon + 0.5, epsilon + 1.25, epsilon + 0.25],random_state=7)

X_init = np.array([[-4,1,0.5],[0,-4,1.5]])# inital centroids

X,Y = kmeans(D,r,X_init)

import matplotlib.pyplot as plt

fig = plt.figure()

plt.scatter(D[:, 0], D[:, 1], c=np.nonzero(Y)[1], s=10)

plt.scatter(X.T[:, 0], X.T[:, 1], c='magenta', s=50, marker = 'D')

plt.show()

Initialization#

The \(k\)-means problem is NP-hard, while SVD is polynomially solvable. The simple addition of binary constraints changes the complexity of the problem a lot! Although Lloyds algorithm follows a sound optimization scheme, we can observe that the k-means clustering is complex from its sensitivity to the initialization.

n=500

r =3

epsilon = 0.1

D, labels = datasets.make_blobs(n_samples=n,centers=3, cluster_std=[epsilon + 0.5, epsilon + 1.25, epsilon + 0.25],random_state=1)